Disclaimer – The use of nested virtualisation is not a supported topology

Harvester is an open-source HCI solution aimed at managing Virtual Machines, similar to vSphere and Nutanix, with key differences including (but not limited to):

- Fully Open Source

- Leveraging Kubernetes-native technologies

- Integration with Rancher

Testing or evaluating any hyperconverged solution can be difficult. It usually requires dedicated hardware, as these solutions are designed to run directly on bare metal. However, we can circumvent this with nested virtualisation, something that may be familiar to a lot of homelabbers (myself included). It involves using an existing virtualisation solution to provision workloads that themselves leverage virtualisation technology.

Step 1 – Planning

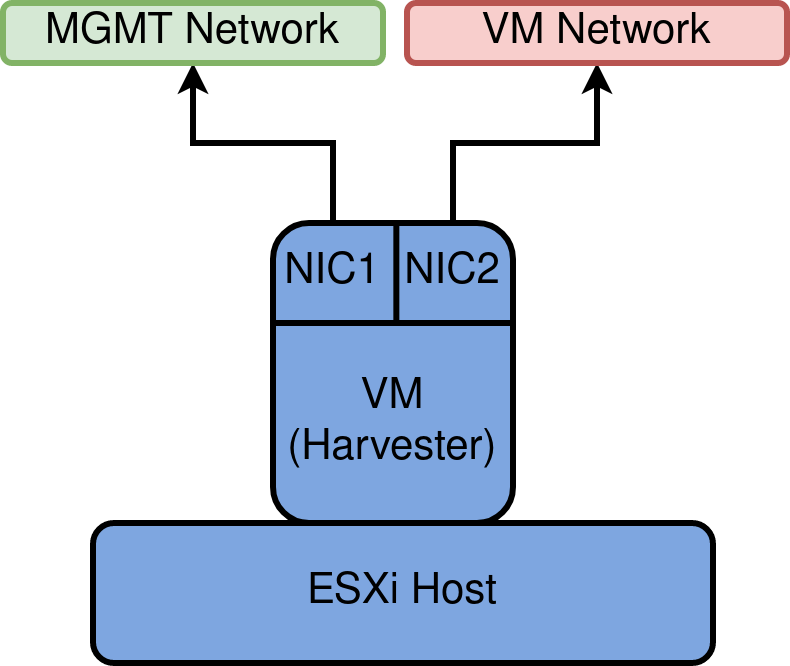

To mimic a production-like system, two NICs will be used: one for management traffic and the other for Virtual Machine traffic, as depicted below.

The MGMT network and VM network will manifest as vDS Port groups.

Also, download the latest Harvester ISO and make it available to vSphere (for example, by uploading it to a datastore).

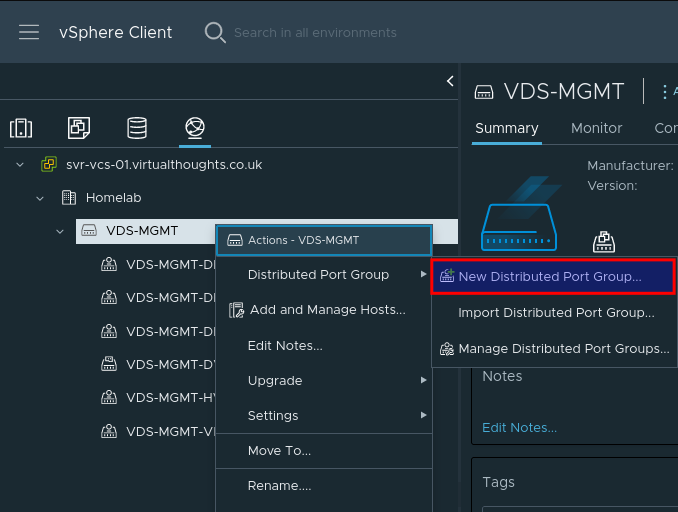

Step 2 – Create vDS Port Groups

It is highly recommended to create new Distributed Port groups for this exercise, mainly because of the configuration we will be applying in the next step.

Create a new vDS Port Group:

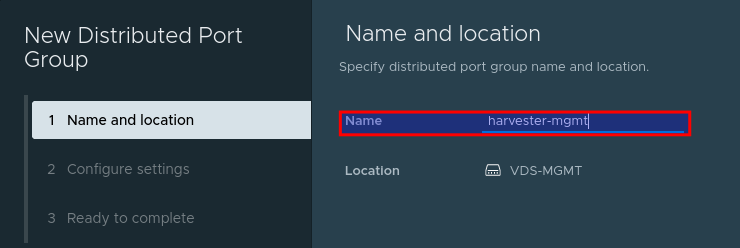

Give the port group a name, such as harvester-mgmt

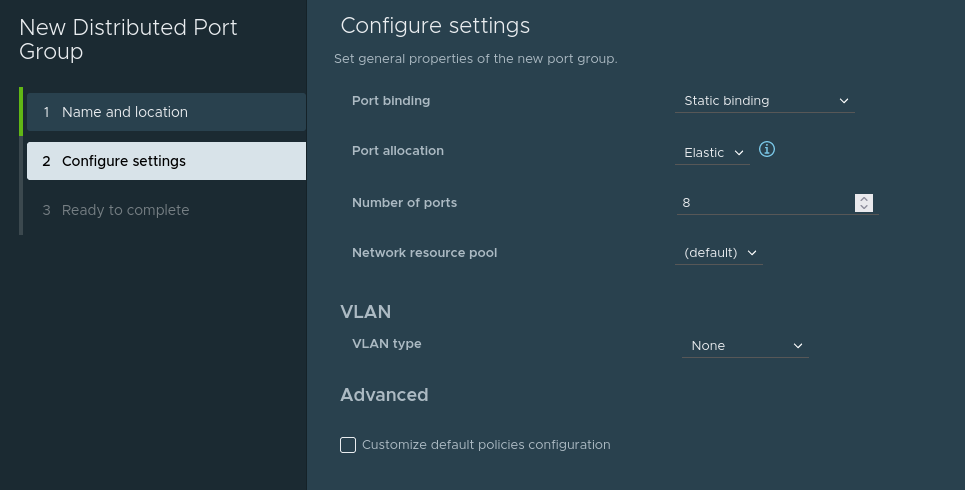

Adjust any configuration (e.g. the VLAN ID) to match your environment if required, or accept the defaults:

Repeat this process to create the harvester-vm Port group. We should now have two port groups:

- harvester-mgmt

- harvester-vm
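For those who prefer scripting over the UI, the two port groups can also be created with PowerCLI. This is a minimal sketch, assuming an existing `Connect-VIServer` session; the switch name `DSwitch` is a placeholder for your own vDS, not something from this environment.

```powershell
# Assumes PowerCLI is installed and Connect-VIServer has already been run.
# "DSwitch" is a hypothetical name; substitute your own distributed switch.
$vds = Get-VDSwitch -Name "DSwitch"

# Create both port groups with default settings.
foreach ($name in "harvester-mgmt", "harvester-vm") {
    New-VDPortgroup -VDSwitch $vds -Name $name
}

# Confirm that both port groups now exist on the switch.
Get-VDPortgroup -VDSwitch $vds -Name "harvester-*" | Select-Object Name, VlanConfiguration
```

If your environment tags traffic, `New-VDPortgroup` also accepts a `-VlanId` parameter.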

Step 3 – Enable MAC learning on Port groups [Critical]

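William Lam has an excellent post on how to accomplish this. It is required for Harvester (or any hypervisor) to function correctly in a nested environment, because the nested VMs send traffic from MAC addresses that the outer vSwitch does not otherwise know about. Note that the Set-MacLearn function used below is not a built-in PowerCLI cmdlet; it comes from the script accompanying that post and must be loaded into your PowerCLI session first.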

```powershell
Set-MacLearn -DVPortgroupName @("harvester-mgmt") -EnableMacLearn $true -EnablePromiscuous $false -EnableForgedTransmit $true -EnableMacChange $false

Set-MacLearn -DVPortgroupName @("harvester-vm") -EnableMacLearn $true -EnablePromiscuous $false -EnableForgedTransmit $true -EnableMacChange $false
```
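To confirm the settings took effect, the port group policy can be read back through the vSphere API objects that PowerCLI exposes. This is a sketch under the assumption that the vCenter and vDS versions are recent enough to expose the MAC management policy (MAC learning is not available on older releases); it only reads values and changes nothing.

```powershell
# Read back the MAC management policy for both port groups.
# Assumes an existing Connect-VIServer session.
foreach ($name in "harvester-mgmt", "harvester-vm") {
    $pg     = Get-VDPortgroup -Name $name
    $policy = $pg.ExtensionData.Config.DefaultPortConfig.MacManagementPolicy

    [PSCustomObject]@{
        Portgroup       = $name
        MacLearning     = $policy.MacLearningPolicy.Enabled
        Promiscuous     = $policy.AllowPromiscuous
        ForgedTransmits = $policy.ForgedTransmits
        MacChanges      = $policy.MacChanges
    }
}
```

With the commands above applied, we would expect `MacLearning` and `ForgedTransmits` to report true, and `Promiscuous` and `MacChanges` to report false.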

Step 4 – Creating a Harvester VM

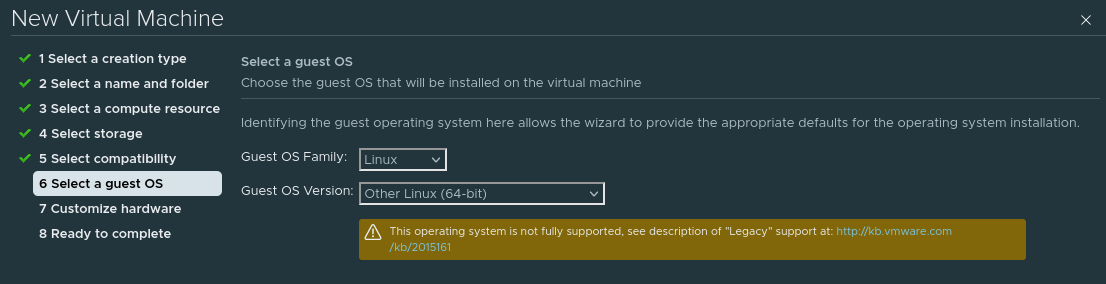

Our Harvester VM will operate like any other VM, with some important differences. In vSphere, go through the standard VM creation wizard to specify the Host/Datastore options. When presented with the OS type, select Other Linux (64 bit).

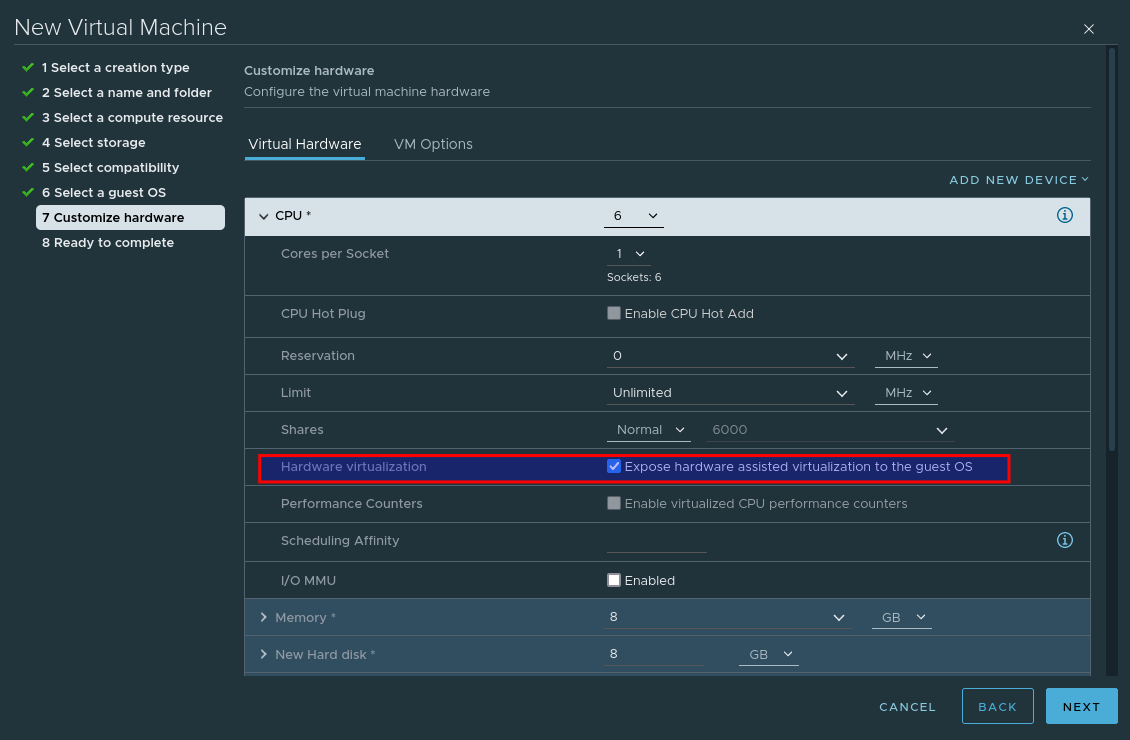

When customising the hardware, select "Expose hardware assisted virtualization to the guest OS" under the CPU settings. This is crucial: without it, Harvester will not install.

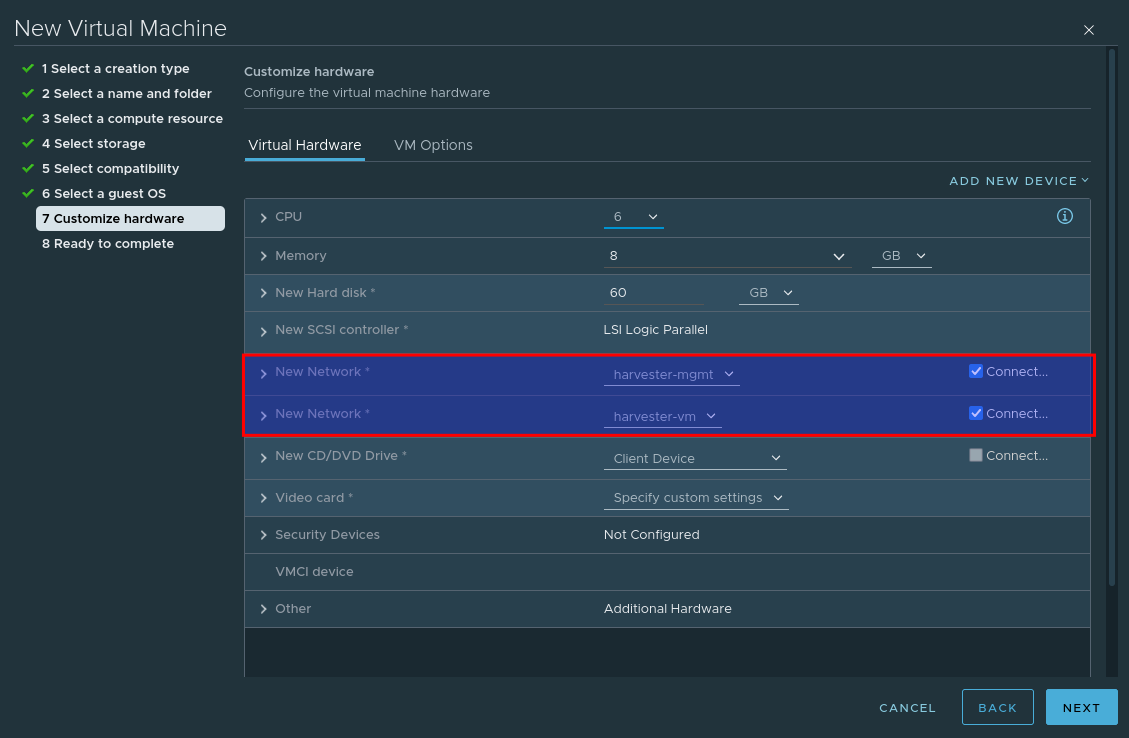

Add an additional network card so that our VM leverages both previously created port groups:

And finally, mount the Harvester ISO image.
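The same VM can be built with PowerCLI instead of the wizard. The sketch below is one way to do it, not the only one. The VM name, ESXi host, datastore, ISO path and sizing values are all placeholders I have made up for illustration, so check Harvester's own hardware requirements before settling on CPU, memory and disk.

```powershell
# Assumes an existing Connect-VIServer session. All names and sizes below
# are hypothetical placeholders; adjust them to your environment.
$vmName    = "harvester-01"
$vmHost    = Get-VMHost -Name "esxi01.lab.local"
$datastore = Get-Datastore -Name "datastore1"
$isoPath   = "[datastore1] iso/harvester.iso"

$mgmtPg = Get-VDPortgroup -Name "harvester-mgmt"
$vmPg   = Get-VDPortgroup -Name "harvester-vm"

# Create the VM as "Other Linux (64-bit)" with its first NIC on harvester-mgmt.
$vm = New-VM -Name $vmName -VMHost $vmHost -Datastore $datastore `
    -GuestId "otherLinux64Guest" -NumCpu 8 -MemoryGB 32 -DiskGB 250 `
    -Portgroup $mgmtPg

# Equivalent of "Expose hardware assisted virtualization to the guest OS".
$spec = New-Object VMware.Vim.VirtualMachineConfigSpec
$spec.NestedHVEnabled = $true
$vm.ExtensionData.ReconfigVM($spec)

# Add the second NIC on harvester-vm and attach the installer ISO.
New-NetworkAdapter -VM $vm -Portgroup $vmPg -Type Vmxnet3 -StartConnected
New-CDDrive -VM $vm -IsoPath $isoPath -StartConnected
```

The nested virtualisation flag is set through the API spec because it has to be applied while the VM is powered off, which is the case here since the VM has not been started yet.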

Step 4 (continued) – Install Harvester

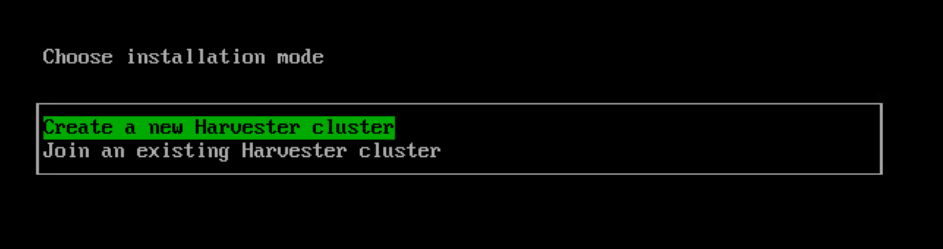

Power on the VM. Provided the ISO is mounted and connected, you should be presented with the install screen. As this is the first node, select "Create a new Harvester cluster".

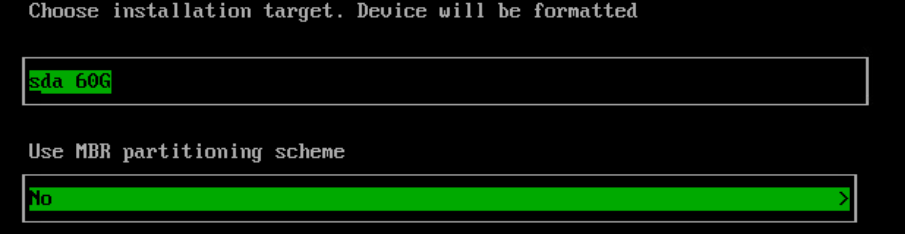

Select the Install target and optional MBR partitioning

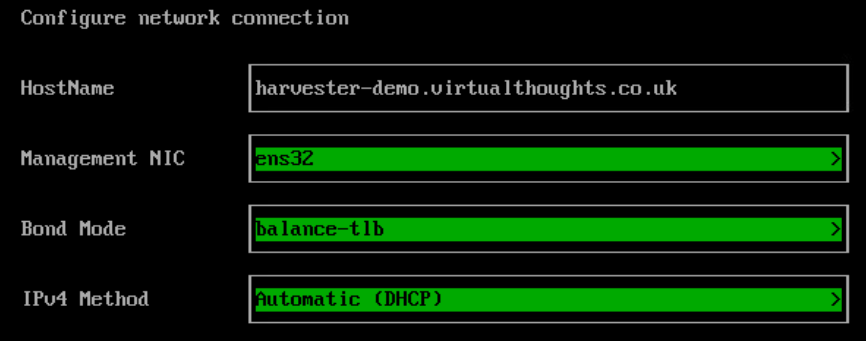

Configure the hostname, management nic and IP assignment options.

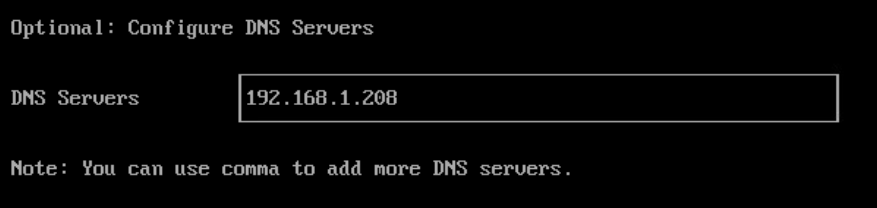

Configure the DNS servers:

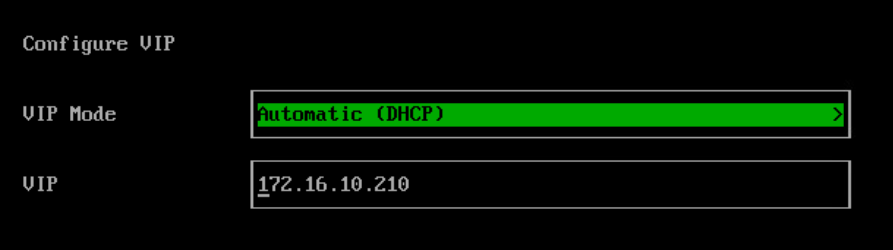

Configure the Harvester VIP. This is what we will use to access the Web UI. This can also be obtained via DHCP if desired.

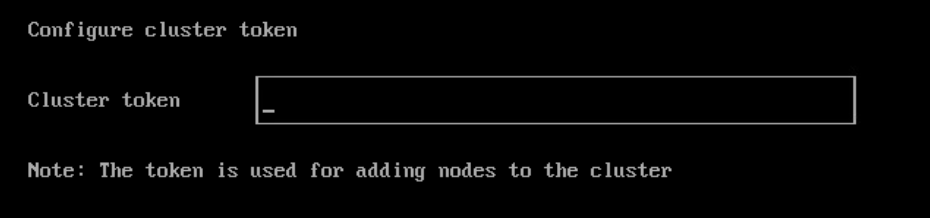

Configure the cluster token. This is required if you want to add more nodes later on.

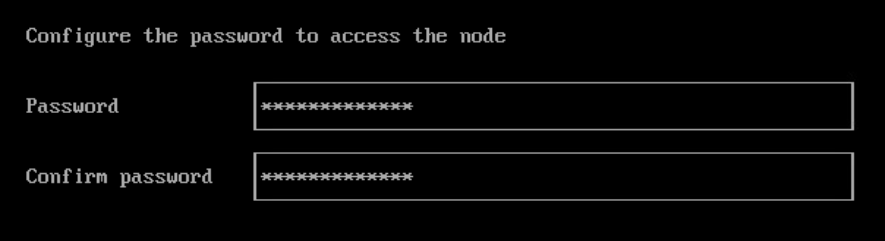

Configure the local Password:

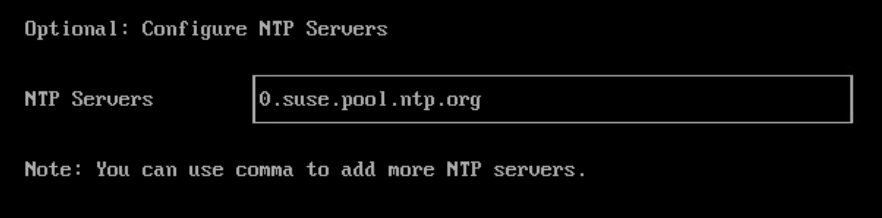

Configure the NTP server Address:

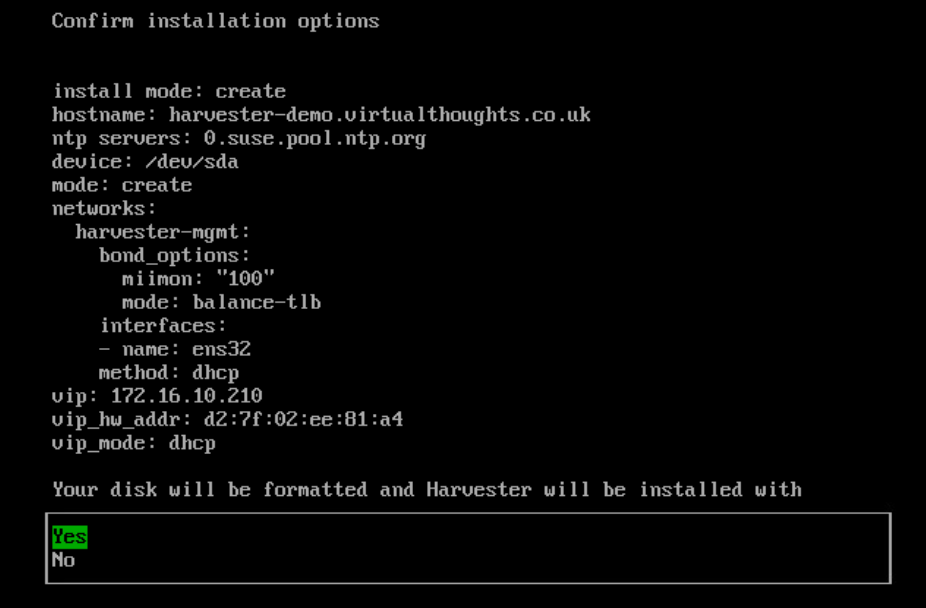

The subsequent options, such as importing SSH keys or reading a remote config, are optional. A summary will be presented before the install begins:

Proceed with the install.

Note: After the reboot, it may take a few minutes before Harvester reports a ready state. Once it does, navigate to the reported management URL.

At that point you will be prompted to reset the admin password.
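Rather than refreshing the browser, a small polling loop can tell us when the management URL starts answering. This is a rough sketch assuming PowerShell 7 or later (for `-SkipCertificateCheck`, which is needed because a fresh install presents a self-signed certificate). The VIP address is a made-up placeholder, and an HTTP 200 only shows that the UI is reachable, which is not necessarily the same as every component being ready.

```powershell
# Hypothetical VIP; replace with the address configured during install.
$vip      = "192.168.1.50"
$deadline = (Get-Date).AddMinutes(15)
$ready    = $false

while ((Get-Date) -lt $deadline) {
    try {
        # Requires PowerShell 7+; the certificate check is skipped on purpose.
        $response = Invoke-WebRequest -Uri "https://$vip" -SkipCertificateCheck -TimeoutSec 5
        if ($response.StatusCode -eq 200) {
            $ready = $true
            break
        }
    } catch {
        # Connection refused, timeout or HTTP error: keep waiting.
    }
    Start-Sleep -Seconds 15
}

if ($ready) {
    Write-Host "Harvester UI is answering at https://$vip"
} else {
    Write-Host "Timed out waiting for https://$vip"
}
```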

Step 5 – Configure VM Network

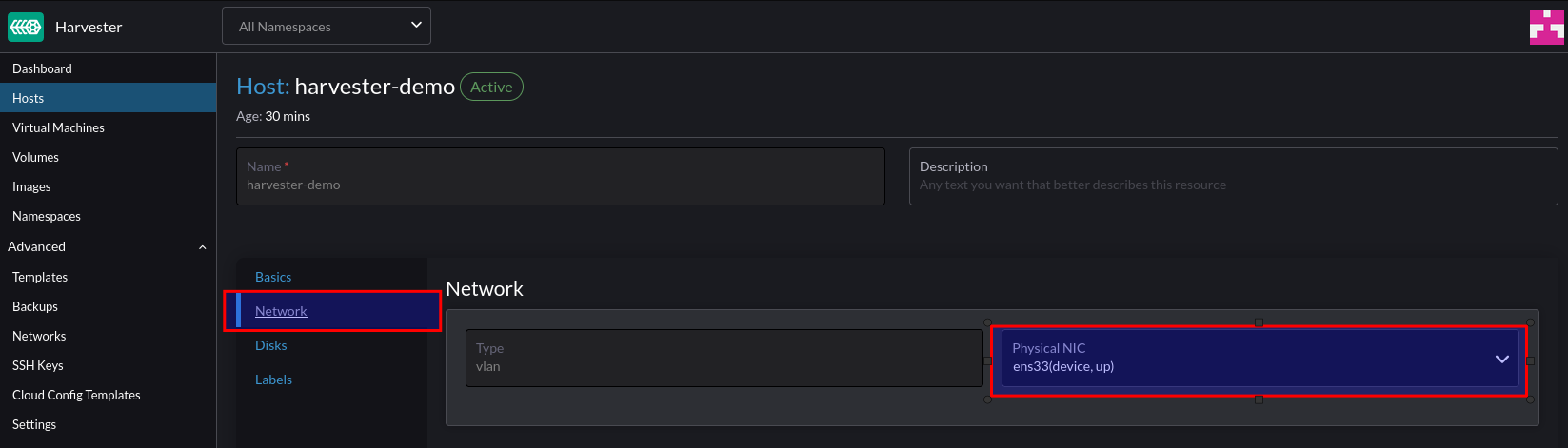

Once logged in to Harvester, navigate to Hosts > Edit Config.

Assign the secondary NIC to the VLAN network (our VM network).

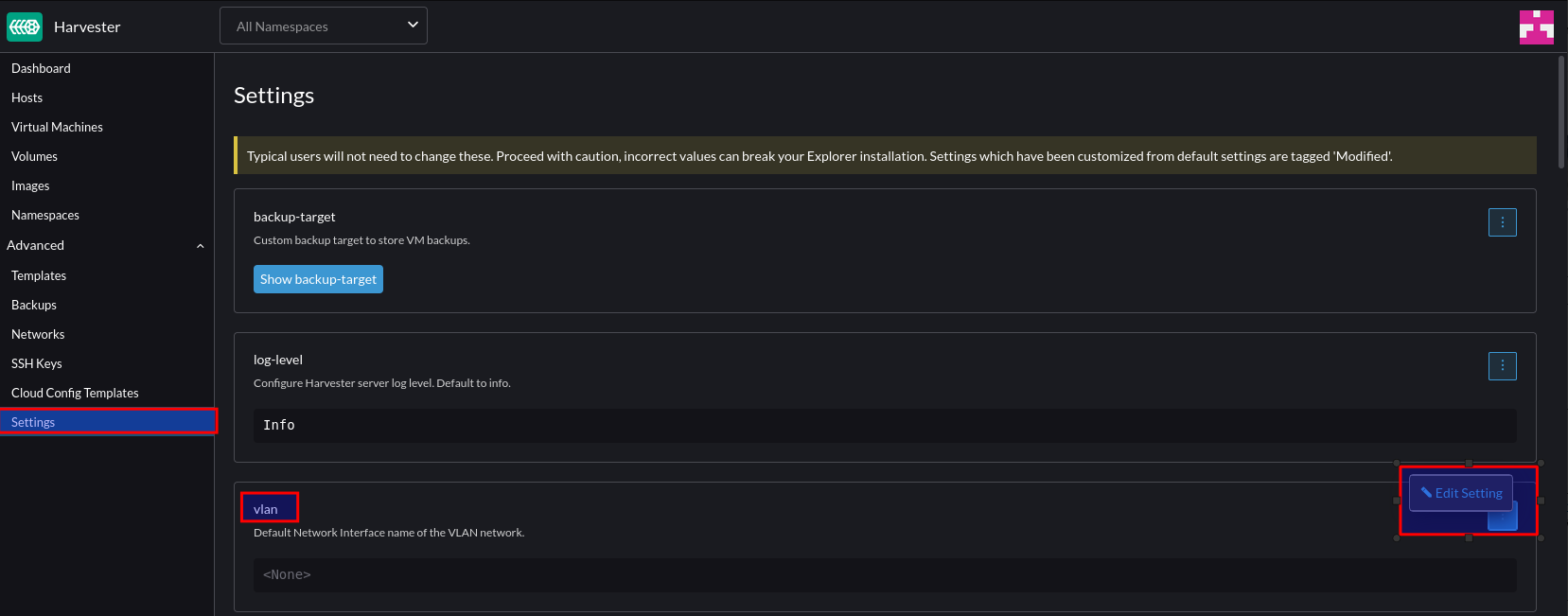

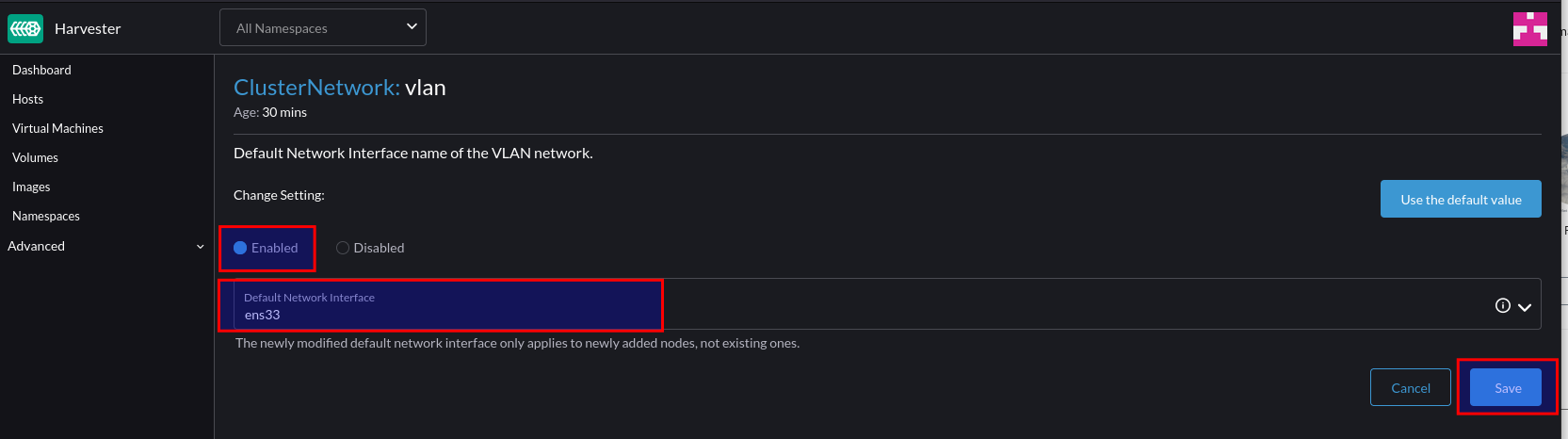

Navigate to Settings > VLAN > Edit.

Click “Enable” and set the default interface to the secondary interface. This will be the default for any new nodes that join the cluster.

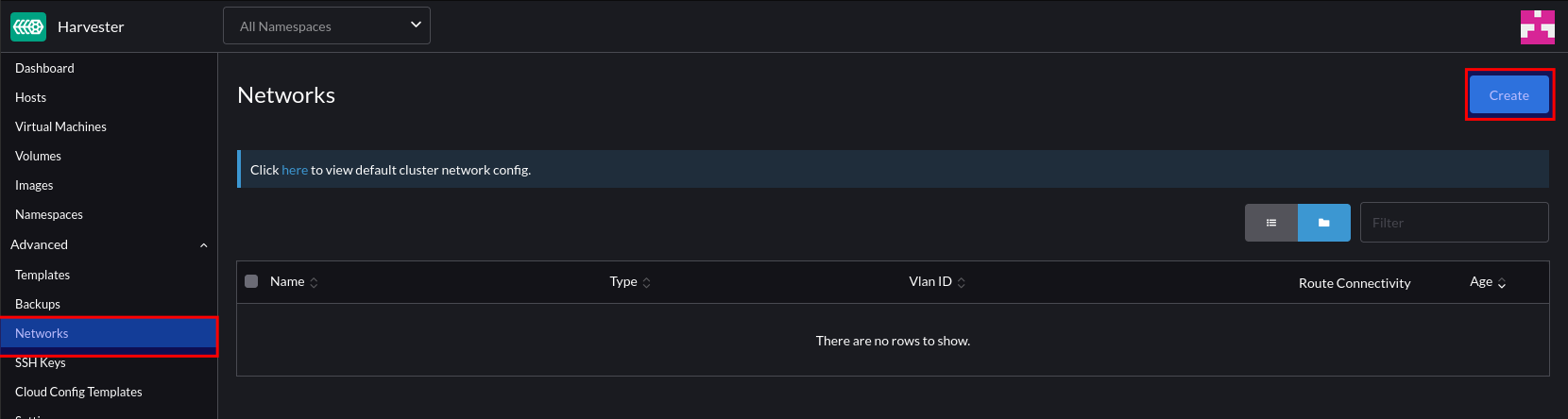

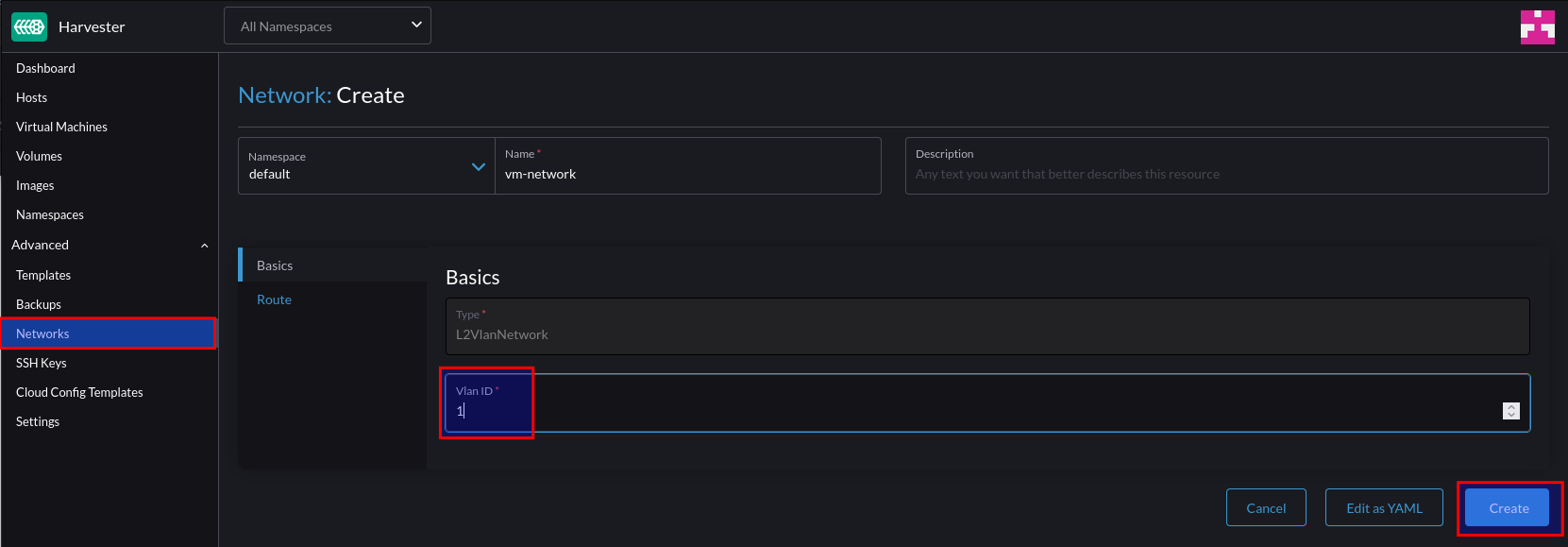

To create a network for our VMs to reside in, select Network > Create:

Give this network a name and a VLAN ID. Note – you can supply VLAN ID 1 if you’re using the native/default VLAN.

Step 6 – Test VM Network

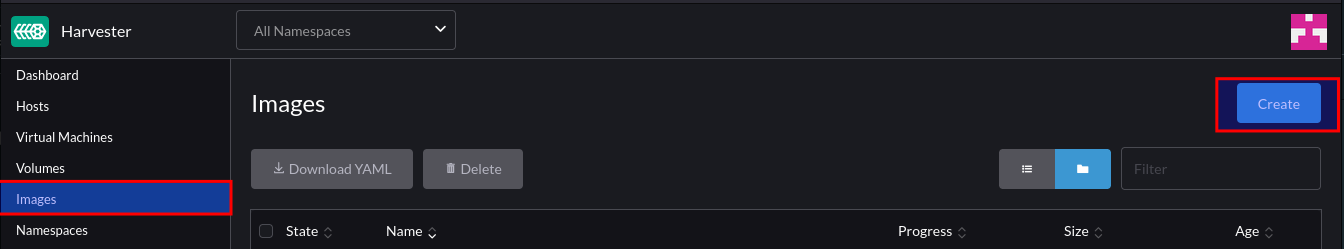

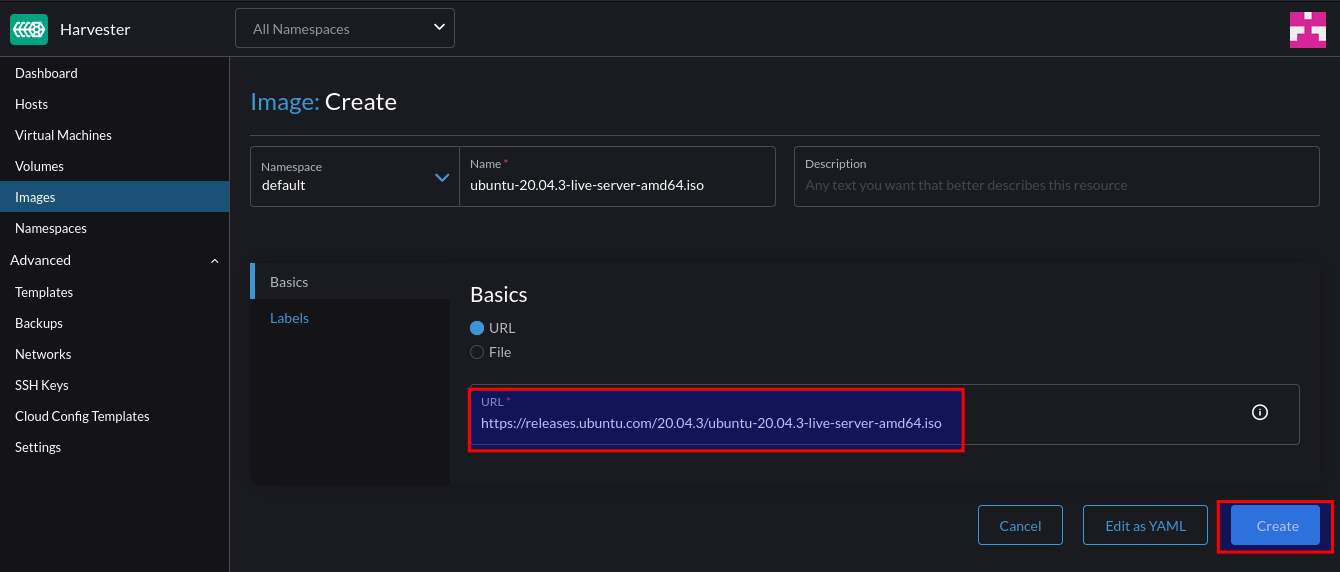

Firstly, create a new image:

For this example, we can use an ISO image. After we supply the URL, Harvester will download and store the image:

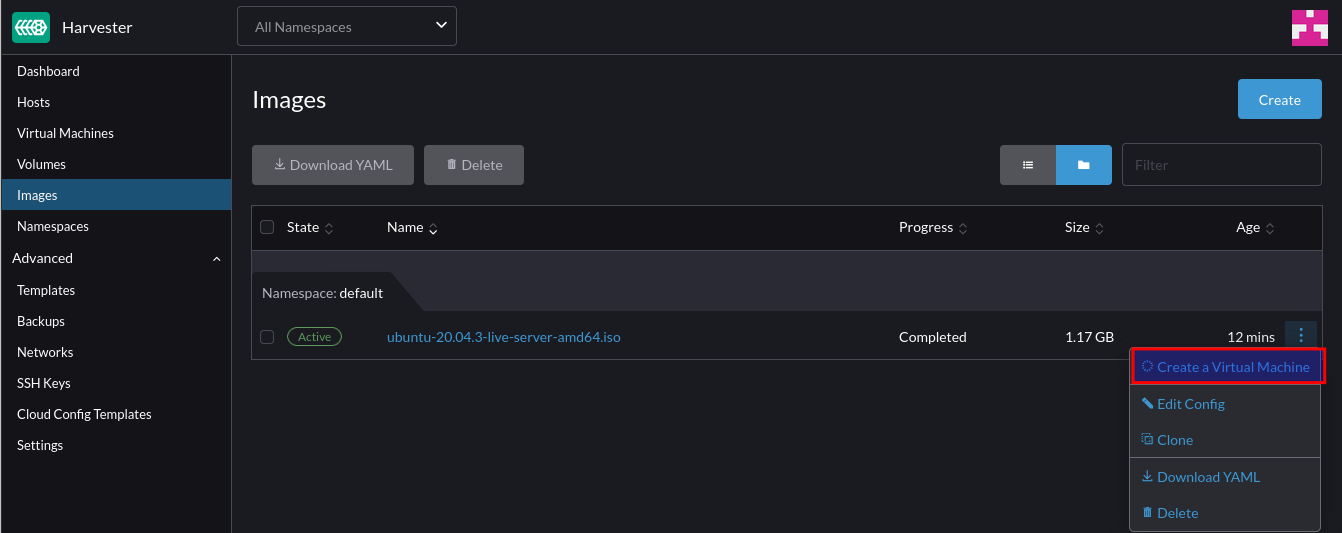

After downloading, we can create a VM from it:

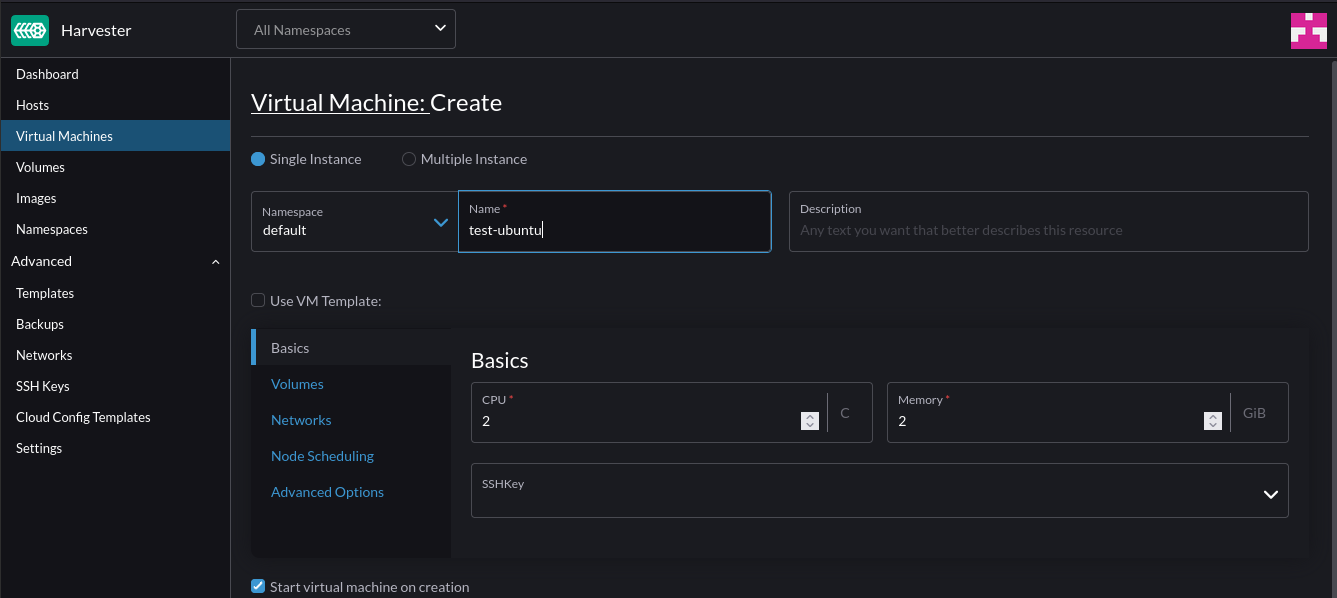

Specify the VM specs (CPU and memory):

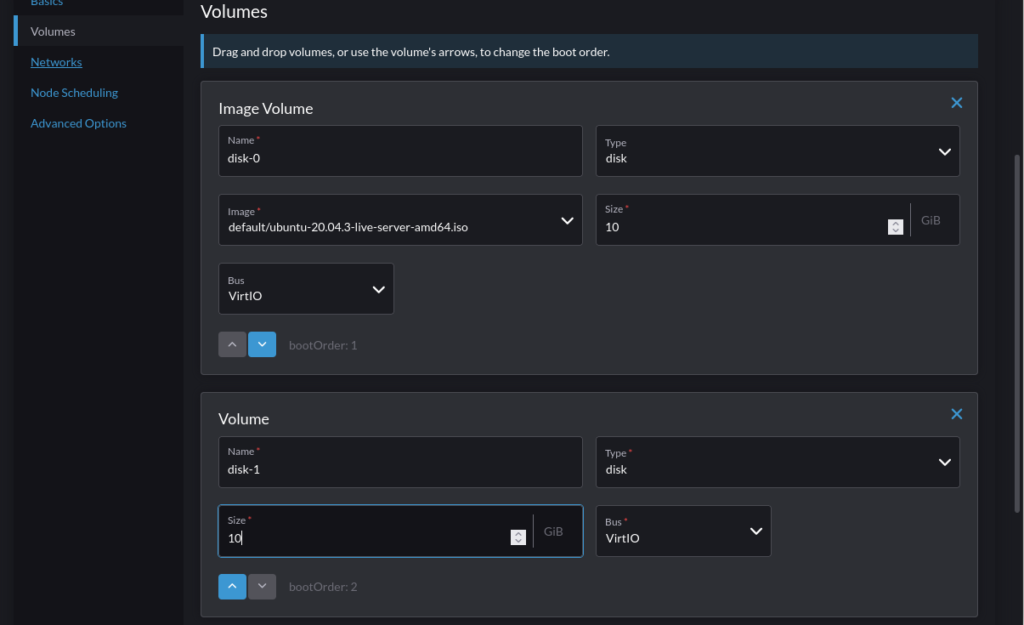

Under Volumes, add an additional volume to act as the installation target for the OS (Or leave if purely wanting to use a live ISO):

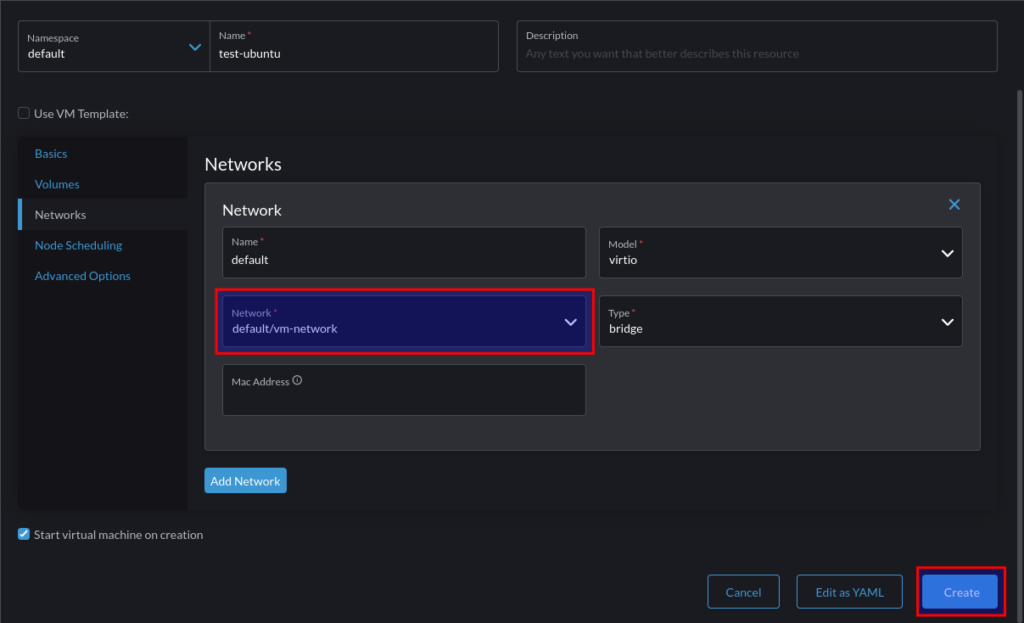

Under Networks, change the selection to the VM network that was previously created and click “Create”:

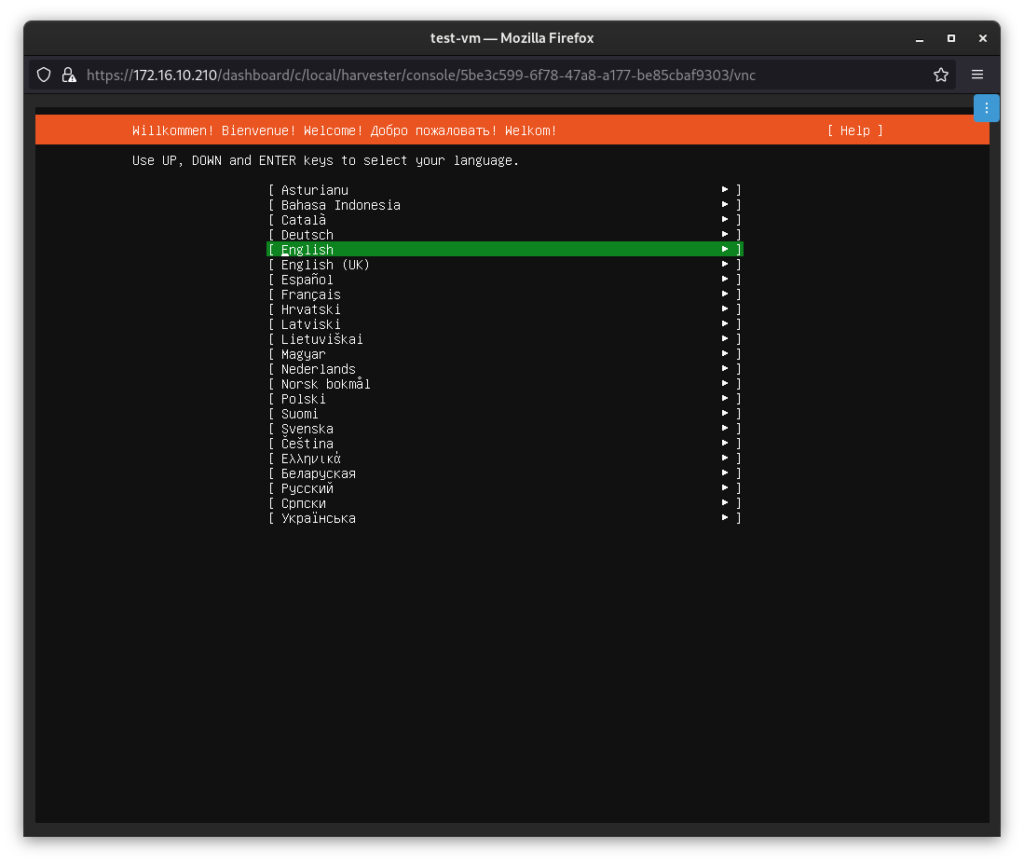

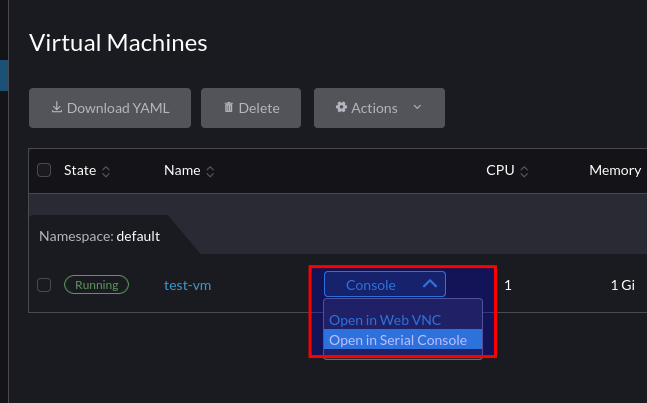

Once the VM is in a running state, we can open a VNC console to it:

At that point we can interact with it as we would expect with any HCI solution: