Overview

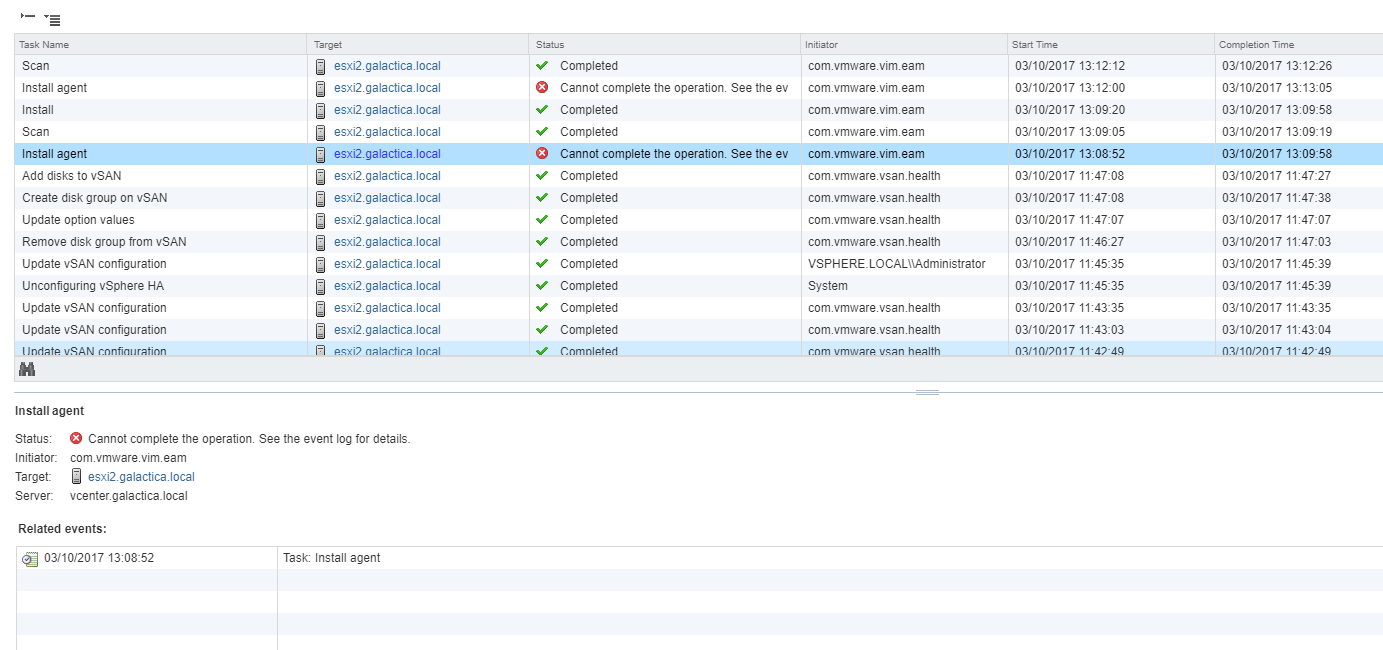

I was recently tasked with migrating a selection of ESXi 5.5 hosts into a new vSphere 6.5 environment. These hosts used Fibre Channel HBAs for block storage and 2x10GbE interfaces for all other traffic types. I assumed that detaching the hosts from the vDS and resyncing was not the correct approach, even though some people have reported success doing it this way. The /r/vmware Reddit community agreed, and I later found a VMware KB article that backs the more widely accepted solution: moving everything to a vSphere Standard Switch (vSS) first.

Automating the process

There are already several resources on vDS -> vSS migrations, but I fancied trying it myself. I used Virtually Ghetto’s script as a foundation for my own and made a few changes specific to my environment. These included:

- Populating a vSS dynamically by probing the vDS the host was attached to, including VLAN ID tags

- Adding a prefix to the vSS port group names to differentiate them from the vDS port groups

- Automating the migration of VM port groups from the vDS to a vSS in a way that would result in no downtime.
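The prefix convention can be sketched as a pair of small helpers. This is only an illustration, not part of the script I ran: the function names `Add-VssPrefix` and `Remove-VssPrefix` are my own invention, and `DMZ_VSS_Legacy` is a made-up port group name. The second helper strips the prefix only when it appears at the start of the name, which is a little stricter than a plain string replace if the port groups are ever mapped back to their original names.

```powershell
# Hypothetical helpers (not part of the original script) for the "VSS_" naming convention.

function Add-VssPrefix {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [string] $Prefix = 'VSS_'
    )
    # vDS port group "Mgmt" becomes vSS port group "VSS_Mgmt"
    "$Prefix$Name"
}

function Remove-VssPrefix {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [string] $Prefix = 'VSS_'
    )
    # Strip the prefix only when it is at the start of the name, so a name
    # that merely contains "VSS_" somewhere else is left untouched.
    if ($Name.StartsWith($Prefix, [System.StringComparison]::Ordinal)) {
        return $Name.Substring($Prefix.Length)
    }
    $Name
}

Add-VssPrefix -Name 'vMotion1'             # VSS_vMotion1
Remove-VssPrefix -Name 'VSS_vMotion1'      # vMotion1
Remove-VssPrefix -Name 'DMZ_VSS_Legacy'    # DMZ_VSS_Legacy (made-up name, unchanged)
```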

Script process

This script performs the migration on a specific host, defined in $vmhost.

- Connect to vCenter Server

- Create a vSS on the host called “vSwitch_Migration”

- Iterate through the vDS port groups and recreate them on the vSS like-for-like, including VLAN ID tagging where appropriate

- Acquire the list of VMkernel adapters

- Move vmnic0 from the vDS to the vSS, migrating the VMkernel interfaces at the same time

- Iterate through all the VMs on the host and reconfigure each network adapter so that its port group resides on the vSS

- Once all the VMs have migrated, add the second (and final, in my environment) vmnic to the vSS

- At this point nothing specific to this host resides on the vDS, so remove the host from the vDS
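As a rough illustration of the port group step, the name and the optional VLAN ID can be gathered into a single hashtable and splatted onto `New-VirtualPortGroup`. This is a sketch under my own assumptions rather than the code I used: `Get-VssPortGroupParams` is a hypothetical helper name, and it assumes that an untagged port group reports an empty VLAN ID.

```powershell
# Hypothetical helper (not part of the original script): builds the parameters
# for one vSS port group from a vDS port group name and an optional VLAN ID.
function Get-VssPortGroupParams {
    param(
        [Parameter(Mandatory)] [string] $VdsPortGroupName,
        [object] $VlanId,
        [string] $Prefix = 'VSS_'
    )
    $params = @{ Name = "$Prefix$VdsPortGroupName" }

    # Only add the VLAN ID when the vDS port group actually has one
    if (-not [string]::IsNullOrEmpty([string]$VlanId)) {
        $params['VLanId'] = [int]$VlanId
    }
    $params
}

$tagged   = Get-VssPortGroupParams -VdsPortGroupName 'Mgmt' -VlanId 100
$untagged = Get-VssPortGroupParams -VdsPortGroupName 'Untagged' -VlanId $null

# With a PowerCLI session and a vSS object in $vss_name, the result could be splatted:
#   New-VirtualPortGroup -VirtualSwitch $vss_name @tagged | Out-Null
```

Splatting keeps a single `New-VirtualPortGroup` call for both the tagged and untagged cases.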

If you plan to run these scripts in your environment, test first in a non-production environment.

Write-Host "Connecting to vCenter Server" -foregroundcolor Green

Connect-VIServer -Server "vCenterServer" -User administrator@vsphere.local -Password "somepassword" | Out-Null

# Individual ESXi host to migrate from vDS to VSS

$vmhost = "192.168.1.20"

Write-Host "Host selected: " $vmhost -foregroundcolor Green

# Create a new vSS on the host

$vss_name = New-VirtualSwitch -VMHost $vmhost -Name vSwitch_Migration

Write-Host "Created new vSS on host" $vmhost "named" "vSwitch_Migration" -foregroundcolor Green

#VDS to migrate from

$vds_name = "MyvDS"

$vds = Get-VDSwitch -Name $vds_name

#Probe the VDS, get port groups and re-create on VSS

$vds_portgroups = Get-VDPortGroup -VDSwitch $vds_name

foreach ($vds_portgroup in $vds_portgroups)

{

if([string]::IsNullOrEmpty($vds_portgroup.vlanconfiguration.vlanid))

{

Write-Host "No VLAN Config for" $vds_portgroup.name "found" -foregroundcolor Green

$PortgroupName = $vds_portgroup.Name

New-VirtualPortGroup -virtualSwitch $vss_name -name "VSS_$PortgroupName" | Out-Null

}

else

{

Write-Host "VLAN config present for" $vds_portgroup.name -foregroundcolor Green

$PortgroupName = $vds_portgroup.Name

New-VirtualPortGroup -virtualSwitch $vss_name -name "VSS_$PortgroupName" -VLanId $vds_portgroup.vlanconfiguration.vlanid | Out-Null

}

}

#Create a list of VMKernel adapters

$management_vmkernel = Get-VMHostNetworkAdapter -VMHost $vmhost -Name "vmk0"

$vmotion1_vmkernel = Get-VMHostNetworkAdapter -VMHost $vmhost -Name "vmk1"

$vmotion2_vmkernel = Get-VMHostNetworkAdapter -VMHost $vmhost -Name "vmk2"

$vmkernel_list = @($management_vmkernel,$vmotion1_vmkernel,$vmotion2_vmkernel)

#Create mapping for VMKernel -> vss Port Group

$management_vmkernel_portgroup = Get-VirtualPortGroup -Name "VSS_Mgmt" -VMHost $vmhost

$vmotion1_vmkernel_portgroup = Get-VirtualPortGroup -Name "VSS_vMotion1" -VMHost $vmhost

$vmotion2_vmkernel_portgroup = Get-VirtualPortGroup -Name "VSS_vMotion2" -VMHost $vmhost

$pg_array = @($management_vmkernel_portgroup,$vmotion1_vmkernel_portgroup,$vmotion2_vmkernel_portgroup)

#Move 1 uplink to the vss, also move over vmkernel interfaces

Write-Host "Moving vmnic0 from the vDS to VSS including vmkernel interfaces" -foregroundcolor Green

Add-VirtualSwitchPhysicalNetworkAdapter -VMHostPhysicalNic (Get-VMHostNetworkAdapter -Physical -Name "vmnic0" -VMHost $vmhost) -VirtualSwitch $vss_name -VMHostVirtualNic $vmkernel_list -VirtualNicPortgroup $pg_array -Confirm:$false

#Moving VMs from vDS to VSS

$vmlist = Get-VM | Where-Object {$_.VMHost.name -eq $vmhost}

foreach ($vm in $vmlist)

{

#VMs may have more than one NIC

$vmniclist = Get-NetworkAdapter -vm $vm

foreach ($vmnic in $vmniclist)

{

$newportgroup = "VSS_" + $vmnic.NetworkName

Write-Host "Changing port group for" $vm.name "from" $vmnic.NetworkName "to " $newportgroup -foregroundcolor Green

Set-NetworkAdapter -NetworkAdapter $vmnic -NetworkName $newportgroup -Confirm:$false | Out-Null

}

}

#Moving additional vmnic to vss

Write-Host "All VMs migrated, adding second vmnic to vSS" -foregroundcolor Green

Add-VirtualSwitchPhysicalNetworkAdapter -VMHostPhysicalNic (Get-VMHostNetworkAdapter -Physical -Name "vmnic1" -VMHost $vmhost) -VirtualSwitch $vss_name -Confirm:$false

#Tidyup - Remove DVS from this host

Write-Host "Removing host from vDS" -foregroundcolor Green

$vds | Remove-VDSwitchVMHost -VMHost $vmhost -Confirm:$false
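Before running the final removal step, it may be worth confirming that no VM network adapter on the host still points at a port group without the `VSS_` prefix. The following is a minimal sketch of such a check, not something from my original script: `Get-UnmigratedAdapter` is a hypothetical helper, and the sample objects below are made up so the logic can be tried without a vCenter connection.

```powershell
# Hypothetical helper (not part of the original script): returns the adapters
# whose NetworkName does not start with the vSS prefix.
function Get-UnmigratedAdapter {
    param(
        [Parameter(Mandatory)] [AllowEmptyCollection()] [object[]] $Adapter,
        [string] $Prefix = 'VSS_'
    )
    $Adapter | Where-Object {
        -not ([string]$_.NetworkName).StartsWith($Prefix, [System.StringComparison]::Ordinal)
    }
}

# Made-up sample data standing in for Get-NetworkAdapter output
$sample = @(
    [pscustomobject]@{ Name = 'Network adapter 1'; NetworkName = 'VSS_Prod' },
    [pscustomobject]@{ Name = 'Network adapter 2'; NetworkName = 'Prod' }
)
$left = @(Get-UnmigratedAdapter -Adapter $sample)
Write-Host "Adapters still to migrate:" $left.Count

# Against a live host (assumes a PowerCLI session and $vmhost from the script):
#   $adapters = Get-VM -Location (Get-VMHost -Name $vmhost) | Get-NetworkAdapter
#   Get-UnmigratedAdapter -Adapter $adapters | Select-Object Parent, Name, NetworkName
```

If the check returns anything, those adapters would need attention before the host is removed from the vDS.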

The reverse

Although vSphere has some handy tools to migrate hosts, portgroups and networking to a vDS, scripting the reverse didn’t require many changes to the original script:

Write-Host "Connecting to vCenter Server" -foregroundcolor Green

Connect-VIServer -Server "vCenterServer" -User administrator@vsphere.local -Password "somepassword" | Out-Null

# Individual ESXi host to migrate from vSS to vDS

$vmhost = "192.168.1.20"

Write-Host "Host selected: " $vmhost -foregroundcolor Green

#VDS to migrate to

$vds_name = "MyvDS"

$vds = Get-VDSwitch -Name $vds_name

#Vss to migrate from

$vss_name = "vSwitch_Migration"

$vss = Get-VirtualSwitch -Name $vss_name -VMHost $vmhost

#Add host to vDS but don't add uplinks yet

Write-Host "Adding host to vDS without uplinks" -foregroundcolor Green

Add-VDSwitchVMHost -VMHost $vmhost -VDSwitch $vds

#Create a list of VMKernel adapters

$management_vmkernel = Get-VMHostNetworkAdapter -VMHost $vmhost -Name "vmk0"

$vmotion1_vmkernel = Get-VMHostNetworkAdapter -VMHost $vmhost -Name "vmk1"

$vmotion2_vmkernel = Get-VMHostNetworkAdapter -VMHost $vmhost -Name "vmk2"

$vmkernel_list = @($management_vmkernel,$vmotion1_vmkernel,$vmotion2_vmkernel)

#Create mapping for VMKernel -> vds Port Group

$management_vmkernel_portgroup = Get-VDPortgroup -name "Mgmt" -VDSwitch $vds_name

$vmotion1_vmkernel_portgroup = Get-VDPortgroup -name "vMotion1" -VDSwitch $vds_name

$vmotion2_vmkernel_portgroup = Get-VDPortgroup -name "vMotion2" -VDSwitch $vds_name

$vmkernel_portgroup_list = @($management_vmkernel_portgroup,$vmotion1_vmkernel_portgroup,$vmotion2_vmkernel_portgroup)

#Move 1 uplink to the vDS, also move over vmkernel interfaces

Write-Host "Moving vmnic0 from the vSS to vDS including vmkernel interfaces" -foregroundcolor Green

Add-VDSwitchPhysicalNetworkAdapter -VMHostPhysicalNic (Get-VMHostNetworkAdapter -Physical -Name "vmnic0" -VMHost $vmhost) -DistributedSwitch $vds_name -VMHostVirtualNic $vmkernel_list -VirtualNicPortgroup $vmkernel_portgroup_list -Confirm:$false

#Moving VMs from VSS to vDS

$vmlist = Get-VM | Where-Object {$_.VMHost.name -eq $vmhost}

foreach ($vm in $vmlist)

{

#VMs may have more than one NIC

$vmniclist = Get-NetworkAdapter -vm $vm

foreach ($vmnic in $vmniclist)

{

$newportgroup = $vmnic.NetworkName.Replace("VSS_","")

Write-Host "Changing port group for" $vm.name "from" $vmnic.NetworkName "to " $newportgroup -foregroundcolor Green

Set-NetworkAdapter -NetworkAdapter $vmnic -Portgroup (Get-VDPortgroup -Name $newportgroup -VDSwitch $vds) -Confirm:$false | Out-Null

}

}

#Moving additional vmnic to vds

Write-Host "All VMs migrated, adding second vmnic to vDS" -foregroundcolor Green

Add-VDSwitchPhysicalNetworkAdapter -VMHostPhysicalNic (Get-VMHostNetworkAdapter -Physical -Name "vmnic1" -VMHost $vmhost) -DistributedSwitch $vds_name -Confirm:$false

#Tidyup - Remove vSS from this host

Write-Host "Removing VSS from host" -foregroundcolor Green

Remove-VirtualSwitch -VirtualSwitch $vss -Confirm:$false